*UPDATE* – Arthur Gould has informed me that, as of CS6, Photoshop supports multiple layers and other basic tools in 32 bit mode. Converting your image to 32 bit for editing is currently the recommended workflow.

An Industry Standard

Anyone investigating sRGB color space inconsistencies¹ long enough will eventually reach the ultimate bit of irony: Photoshop (the industry standard image manipulation tool) handles all of its blending math completely wrong. This is actually well known among graphics professionals, but the issue is slightly technical, so the vast majority of Photoshop users don’t understand the implications.

The Tests

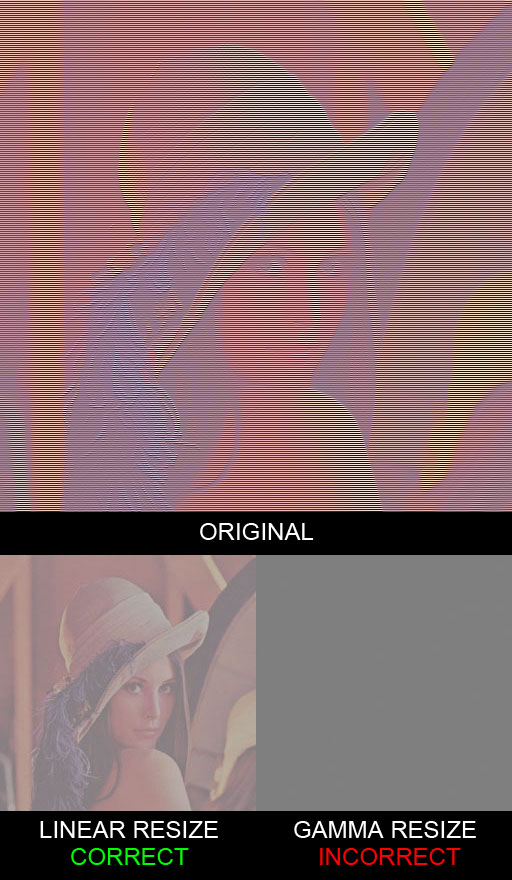

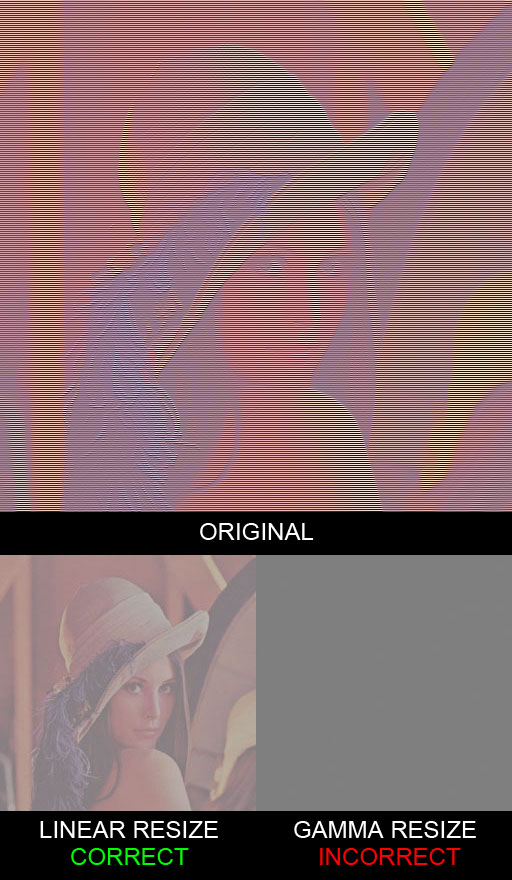

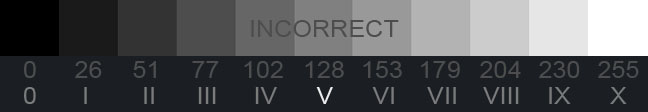

I have constructed an image that Photoshop fails miserably at downsizing². Instead of producing a smaller version of the image, Photoshop just outputs gray.

The image on the left is the correct result; the image on the right is Photoshop’s output. If you don’t believe me, try it yourself: load this image into Photoshop and downsize it by 50%. See? Now, what exactly is going on?

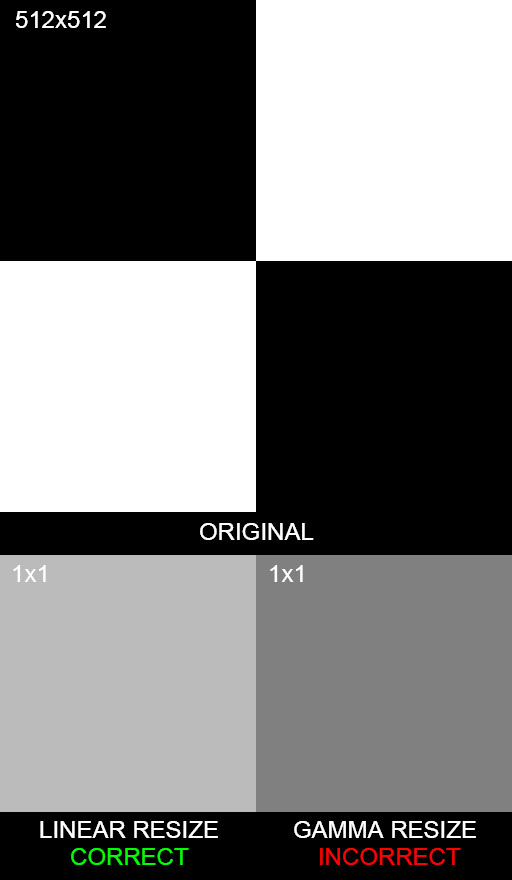

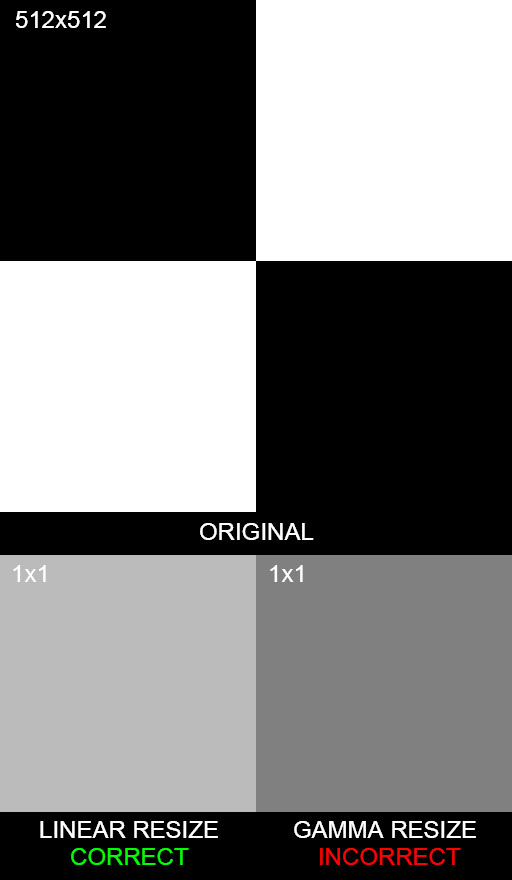

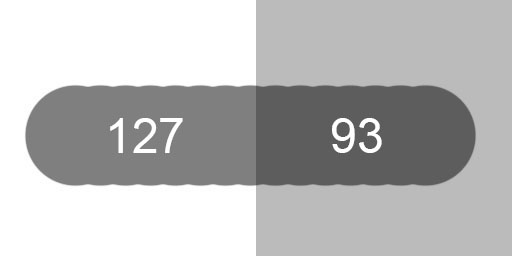

If we take a half black, half white image and resize it down to a 1×1 pixel, we would expect to get a value halfway between the two, the average.

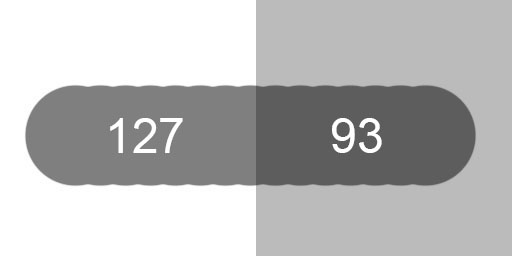

What Photoshop gives us as halfway between (0,0,0) and (255,255,255) is (128,128,128). This answer is wrong. Remember, these numbers are encoded in sRGB space. If we were to properly decode the numbers to linear space, take the average, and re-encode them, we would find the correct result to be (187,187,187). Photoshop gives us an image that is considerably darker than it should be.
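The decode, average, re-encode step can be sketched in a few lines of Python. This is a minimal illustration, assuming the standard piecewise sRGB transfer curve (IEC 61966-2-1); the helper names are hypothetical and nothing Photoshop exposes. The linear average comes out at roughly 187.5 before quantization, so it lands on 187 or 188 depending on whether the final value is truncated or rounded.

```python
def srgb_to_linear(c: float) -> float:
    """Decode an sRGB component in [0, 1] to linear light (piecewise sRGB curve)."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Encode a linear-light component in [0, 1] back to sRGB."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055


def naive_average(a: int, b: int) -> float:
    """Average two 8-bit sRGB codes directly, as if they were linear."""
    return (a + b) / 2


def linear_average(a: int, b: int) -> float:
    """Decode to linear, average, re-encode; returns an unrounded 8-bit value."""
    lin = (srgb_to_linear(a / 255) + srgb_to_linear(b / 255)) / 2
    return linear_to_srgb(lin) * 255


print(naive_average(0, 255))   # 127.5, which rounds to 128
print(linear_average(0, 255))  # roughly 187.5
```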

Yes, that’s correct folks, Photoshop can’t resize images correctly.

For the painters in the crowd: what happens if we take a black brush at 50% opacity and use it on a white background? Will we really get 50% brightness (187)? And if we use the same brush on that 50% background, will we get 25% (136)?

No, and no. We don’t get the correct values, which means the brushes themselves are applied non-linearly. Photoshop brushes respond to opacity very differently from what we would expect in a gamma correct editor.
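The brush question can be checked the same way. This sketch, again assuming the piecewise sRGB curve and using hypothetical helper names, models a brush dab as a plain "over" mix of the brush color onto the background at the given opacity, once on the encoded values and once in linear light. It says nothing about how Photoshop’s brush engine is actually implemented.

```python
def srgb_to_linear(c: float) -> float:
    """Decode an sRGB component in [0, 1] to linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Encode a linear-light component in [0, 1] as sRGB."""
    return c * 12.92 if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055


def paint_naive(bg: float, brush: float, opacity: float) -> float:
    """Mix the 8-bit sRGB codes directly, as a gamma-unaware editor would."""
    return brush * opacity + bg * (1 - opacity)


def paint_linear(bg: float, brush: float, opacity: float) -> float:
    """Decode to linear light, mix, then re-encode to an unrounded 8-bit value."""
    mixed = (srgb_to_linear(brush / 255) * opacity
             + srgb_to_linear(bg / 255) * (1 - opacity))
    return linear_to_srgb(mixed) * 255


# One dab of 50% black on white, then a second dab on top of the first.
first = paint_linear(255, 0, 0.5)     # about 187.5: half of white's light
second = paint_linear(first, 0, 0.5)  # about 136.96: a quarter of white's light
print(first, second)
print(paint_naive(255, 0, 0.5), paint_naive(127.5, 0, 0.5))  # 127.5 63.75
```

Truncated to integers, the linear results match the 187 and 136 quoted above, while the naive mix keeps halving the encoded value and ends up far too dark.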

Layer blending modes and filter effects also have the same problem. Everything about Photoshop’s blending is broken. We can blame Adobe for overlooking such a serious issue, but hey, most of us never even noticed!

A Partial Solution

For a limited set of cases, we can work around “Photoshop Math™”.

As it turns out, 32 bit color mode is not in sRGB space, because that format is intended for HDR images and sRGB is only valid in the 0-1 range; HDR images need to extend beyond it. If we want to resize an image properly, we can convert its mode to 32 bit, do our resize, then convert back to 8 bit (using “Exposure and Gamma” with Exposure = 0 and Gamma = 1). Of course, we can’t use this trick for much more than resizing, because Photoshop is completely gimped in 32 bit mode.
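Outside Photoshop, the same resize-in-linear idea can be sketched as a 2×2 box-filter downsample. This is an illustrative Python sketch under simplifying assumptions: a single grayscale channel stored as nested lists of 8-bit sRGB codes, even dimensions, and a plain box filter rather than whatever resampler Photoshop actually uses. The function name is hypothetical. A one-pixel black and white checkerboard is about the simplest input that shows the difference.

```python
def srgb_to_linear(c: float) -> float:
    """Decode an sRGB component in [0, 1] to linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Encode a linear-light component in [0, 1] as sRGB."""
    return c * 12.92 if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055


def downsample_2x(img: list[list[int]], linear: bool = True) -> list[list[float]]:
    """Halve a grayscale image of 8-bit sRGB codes with a 2x2 box filter.

    With linear=True each block is averaged in linear light (the 32 bit
    workaround); otherwise the encoded codes are averaged directly.
    Output values are left unrounded so the difference stays visible.
    """
    out = []
    for y in range(len(img) // 2):
        row = []
        for x in range(len(img[0]) // 2):
            block = [img[2 * y + dy][2 * x + dx] for dy in (0, 1) for dx in (0, 1)]
            if linear:
                mean = sum(srgb_to_linear(v / 255) for v in block) / 4
                row.append(linear_to_srgb(mean) * 255)
            else:
                row.append(sum(block) / 4)
        out.append(row)
    return out


checker = [[0, 255, 0, 255], [255, 0, 255, 0]] * 2  # 4x4 black/white checkerboard
print(downsample_2x(checker, linear=False))  # [[127.5, 127.5], [127.5, 127.5]]
print(downsample_2x(checker, linear=True))   # every entry roughly 187.5
```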

*UPDATE* – as stated above, CS6 and later versions have a (mostly) functional 32 bit mode. This is the recommended workflow.

Photoshop actually has the ability to do layer blending in linear space. To turn this “feature” on, we need to tick the checkbox labeled “Blend RGB Colors Using Gamma 1.00” in the Edit->Color Settings dialog. In my version, I have to hit “More Options” to see the toggle. Why isn’t this the default setting for layer blending?

While you’re there, change your Gray Working Space to “sGray”, as the default “Dot Gain 20%” is designed for print work³ (and who does that anymore?).

If we want to do anything other than resize or blend layers, we’re out of luck. We can’t even do both in the same file since 32 bit mode doesn’t support layers. Jeez!

*UPDATE* – Again, as of CS6, there is support for multiple layers in 32 bit mode.

Where Do We Go From Here?

Is there an alternative to Photoshop? I’m not so sure. I’ve tried a few other image editors out there and they’ve all had similar problems. Will Adobe fix this? I doubt it. The issue has been known for so long, I’m starting to think they can’t fix it for one reason or another.

If someone is looking to eat Adobe’s lunch, here’s your chance. Give us an image editor with correct linear blending. And while you’re at it, throw in a functional 32 bit mode.

¹ For a better understanding of sRGB and linear color space conversion, I refer you to John Hable’s presentation.

² Inspired by Eric Brasseur’s technique. I’ve uploaded my source code to produce the image here.

³ http://retrofist.com/sgray/
